Where are my CLI args?

MP 93: Some surprising behavior from pdb.

Note: I've been busy this week preparing a talk for PyCon next month, and a local presentation on the same topic. The ongoing series about building a Django project from a single file will continue next week.

I was resuming work on an old project this morning, and I wasn't getting the output I was expecting when running the project's main program against newly-updated data. I thought it had something to do with one of the CLI arguments, but the argument I was passing didn't seem to affect the program's behavior at all.

I wasn't sure the CLI argument was actually being processed, so I inserted a breakpoint right after the call to parser.parse_args(). I was surprised to find that the argument I was passing wasn't being processed at all. Or at least, that's what it looked like.

In this post I'll share a little background about this project, and explain why it only looked like the CLI arguments weren't being handled properly.

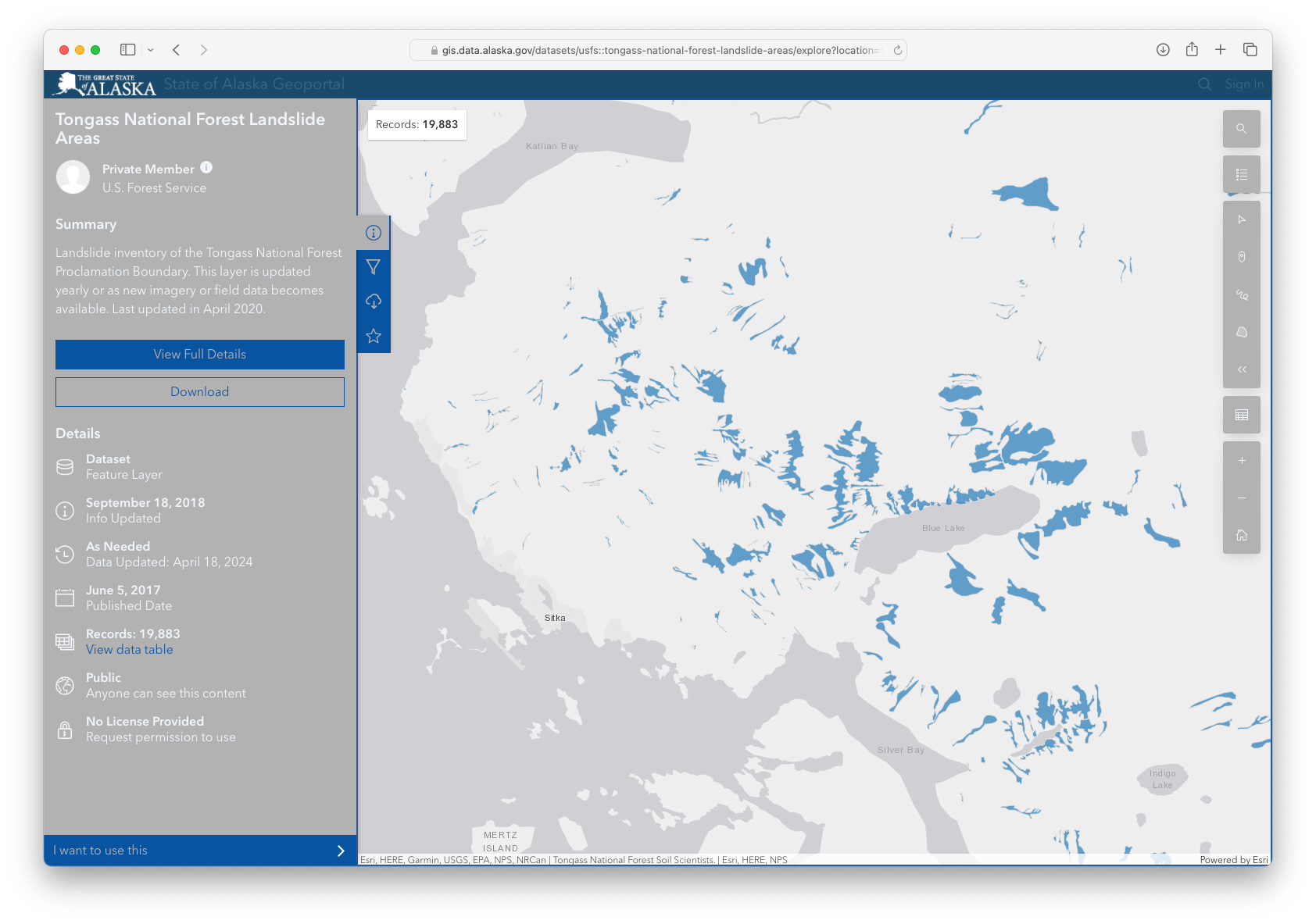

Landslides in southeast Alaska

Many people think of Alaska as cold and snowy, but southeast Alaska is actually a temperate rainforest. The proximity of the Pacific Ocean provides a steady source of moisture, and it moderates temperatures here as well. As a result, we get steady rain throughout most of the year.

Steady rain throughout the year also means heavy rains at times, especially in the fall. Heavy rain on steep mountain slopes can lead to landslides, and that dynamic has shaped the landscape in this region for as long as humans have been here. Climate change, however, has made landslides happen more frequently, and with more severe consequences.

I'm giving a talk at PyCon next month about using river activity in small watersheds to monitor landslide risk in southeast Alaska. I'm also giving a presentation in my hometown next week about a monitoring project I've been working on for almost ten years now.

The direct cause of landslides is an increase in soil moisture content to the point where soil on a slope no longer adheres to the underlying layers on a mountainside. The most accurate approach to landslide monitoring focuses on measuring soil moisture content directly. But that approach is difficult and laborious; you need to place sensors directly in the soil on every slope you want to monitor. This involves remote sensing, and maintaining monitoring equipment in locations that are often difficult to reach.

Other approaches to landslide monitoring involve measuring precipitation, and correlating that to soil moisture content and landslide activity.

A watershed-based approach to landslide monitoring

My hometown, Sitka, is surrounded by steep mountains. Our rivers are quite short. For many of them, you can hike from the ocean to the headwaters in a day if you're happy to do some serious bushwhacking. When Sitka was hit by a fatal landslide in 2015, a number of us started wondering if changes in river activity could be used to indirectly assess landslide risk. We had been in the mountains during periods of heavy rain, and had seen the rivers rise quite noticeably during rain events that were associated with landslide activity.

When it rains heavily in a small watershed, the river rises quickly and begins to recede shortly after the rain lets up. We wondered if these changes could be correlated with landslide activity. Fortunately the main river in our area has a stream gauge that's been maintained for decades, and all the data from it is publicly available.

The two factors we wanted to look at were the rate of change in the river height, and the total rise of the river. Brief heavy rain causes a rapid rise in the river, but the river doesn't rise much overall. This seems to correlate with the mountains quickly shedding moisture, and a low risk of landslides. Extended periods of moderate rain can cause a significant total rise in the river, but without a rapid rate of rise. This seems to correlate with the mountains shedding water at a steady, sustainable rate. Extended periods of heavy rain result in a rapid rise in the river's height, with a significant total rise as well. This seems to correlate with the mountains not being able to shed water as fast as it's falling, which is exactly what causes landslides to occur.

I wrote a program to run through all the river gauge readings over a roughly five-year period, during which a number of landslides were known to have occurred. The program would flag any periods where a total rise of at least 2.5 feet occurred, with an average rate of rise of at least 6 inches per hour. In the initial analysis period, this happened 9 times; 3 of those events were associated with known landslides. This led to the development of a real-time monitoring tool.
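To make the two thresholds concrete, here's a minimal sketch of that kind of check. This is not the code from the actual project; the function names, the data layout (a time-sorted list of `(datetime, height_in_feet)` pairs), and the sample readings are all made up for illustration. It also assumes that any earlier reading can serve as the start of a rise, which is just one way to interpret "periods" here.

```python
from datetime import datetime, timedelta

MIN_TOTAL_RISE_FT = 2.5    # total rise threshold described above
MIN_RATE_FT_PER_HR = 0.5   # 6 inches per hour, expressed in feet


def is_critical_rise(start, end):
    """Return True if the rise from start to end meets both thresholds."""
    (t0, h0), (t1, h1) = start, end
    hours = (t1 - t0).total_seconds() / 3600
    if hours <= 0:
        return False
    rise = h1 - h0
    return rise >= MIN_TOTAL_RISE_FT and rise / hours >= MIN_RATE_FT_PER_HR


def find_critical_readings(readings):
    """Return timestamps of readings that end at least one critical rise.

    Compares every reading against all earlier readings, which is
    quadratic but fine for a sketch.
    """
    flagged = []
    for i, end in enumerate(readings):
        if any(is_critical_rise(start, end) for start in readings[:i]):
            flagged.append(end[0])
    return flagged


def hourly(heights, start=datetime(2020, 1, 1)):
    """Build hourly readings from a list of heights (hypothetical data)."""
    return [(start + timedelta(hours=i), h) for i, h in enumerate(heights)]


# Three made-up scenarios matching the patterns described above.
brief_heavy = hourly([20.0, 21.0, 21.2, 21.0])
extended_moderate = hourly([20.0 + 0.3 * k for k in range(11)])
extended_heavy = hourly([20.0, 20.7, 21.4, 22.1, 22.8])

for label, readings in [("brief heavy", brief_heavy),
                        ("extended moderate", extended_moderate),
                        ("extended heavy", extended_heavy)]:
    print(label, find_critical_readings(readings))
```

With this sample data, only the extended heavy rain scenario gets flagged: the brief event never reaches 2.5 feet of total rise, and the moderate event never reaches 6 inches per hour.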

Back to those CLI args

I haven't done much with this project in the last few years. One of the challenges of landslide monitoring is that events are relatively infrequent, but have significant impact on individuals and communities. It takes years to evaluate the effectiveness of a real-time monitoring system.

Most of my work has been limited to posting notices when conditions in the mountains are such that the model is almost certainly invalid. For example, landslides are extremely unlikely to occur during the winter. This model is unreliable for much of the winter, and into the spring when the snowpack affects drainage patterns in the mountains.

This morning I set out to update the long-term analysis of this approach to monitoring. We've had a number of heavy rain events since I last ran the analysis in early 2021, and I was curious to see how many critical events had occurred, and how many were associated with known slide events.

When I first ran the analysis program, I got an error:

    $ python process_hx_data.py
    Parsing raw data files...
    Traceback (most recent call last):
      ...
      get_readings_from_data_file
        return all_readings
               ^^^^^^^^^^^^
    UnboundLocalError: cannot access local variable 'all_readings'...

This wasn't too surprising. I remembered that I had written the program to use cached data during development work, and I couldn't remember if that was the default behavior or if I needed to pass a CLI argument to process the new data.

Here's the relevant code for the CLI arguments. I wanted to make sure the CLI arguments were being processed correctly, so I inserted a breakpoint immediately after the call to parse_args():

    parser = argparse.ArgumentParser()
    parser.add_argument('--no-interactive-plots',
        ...
    parser.add_argument('--use-cached-data',
        help="Use pickled data; don't parse raw data files.",
        action='store_true')
    args = parser.parse_args()
    breakpoint()

In the debugger, I tried to examine the value of args, and of args.use_cached_data:

    $ python process_hx_data.py
    ...
    -> def process_hx_data(root_output_directory=''):
    (Pdb) args
    (Pdb) args.use_cached_data
    (Pdb)

Nothing! That was a little surprising. I tried again, this time passing the argument --use-cached-data:

    $ python process_hx_data.py --use-cached-data
    ...
    -> def process_hx_data(root_output_directory=''):
    (Pdb) args
    (Pdb) args.use_cached_data
    (Pdb)

Nothing again! This was really confusing. I forget exactly how argparse works when I haven't used it for a while. I thought maybe args is just empty if no CLI arguments are passed. But when I pass --use-cached-data, args should certainly be defined. I should see True for args.use_cached_data.

Falling back to print debugging

I used print debugging for many years before I started using pdb, so I replaced the breakpoint with a print() call:

    parser = argparse.ArgumentParser()
    ...
    parser.add_argument('--use-cached-data',
        help="Use pickled data; don't parse raw data files.",
        action='store_true')
    args = parser.parse_args()
    print(args)

This generated much different output than what I was seeing in the debugger:

    $ python process_hx_data.py
    Namespace(no_interactive_plots=False, no_static_plots=False, use_cached_data=False)
    Parsing raw data files...
    Traceback (most recent call last):
      ...
    UnboundLocalError...

The print() call shows that args is an argparse.Namespace object, and we can see the default values of all three CLI arguments.

Here's the output when --use-cached-data is passed in:

    $ python process_hx_data.py --use-cached-data
    Namespace(no_interactive_plots=False, no_static_plots=False, use_cached_data=True)
    Reading data from pickled files...
    Generating interactive plots...
    ...

Looking at the Namespace object, we can see that use_cached_data is indeed set to True.
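If you also forget how argparse behaves between uses, here's a small sketch you can run without touching the command line. It assumes a stripped-down parser with the three flags visible in the Namespace output (the `--no-static-plots` spelling is inferred from the `no_static_plots` attribute, and the help text is left out). Passing an explicit list to `parse_args()` stands in for `sys.argv`.

```python
import argparse


def build_parser():
    """Build a parser with three store_true flags (help text omitted)."""
    parser = argparse.ArgumentParser()
    for flag in ('--no-interactive-plots', '--no-static-plots',
                 '--use-cached-data'):
        # store_true flags default to False and become True when passed.
        parser.add_argument(flag, action='store_true')
    return parser


parser = build_parser()

# An explicit list replaces sys.argv, so this runs the same way anywhere.
no_flags = parser.parse_args([])
with_flag = parser.parse_args(['--use-cached-data'])

print(no_flags)
print(with_flag)
print(with_flag.use_cached_data)
```

Even when no flags are passed, `parse_args()` returns a fully populated Namespace with every `store_true` option set to False. The dashes in each flag name become underscores in the attribute name, which is why `--use-cached-data` is read back as `args.use_cached_data`.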

What was going on with the pdb session? I tried replacing the print() call with a breakpoint again, but still got no output for these values in the debugging session.

pdb help

I looked at the documentation page for pdb, and didn't see anything that would explain this behavior. Then I ran help in a debugging session:

    (Pdb) help

    Documented commands (type help <topic>):
    ========================================
    EOF    c          d        h         list      q        rv       undisplay
    a      cl         debug    help      ll        quit     s        unt
    alias  clear      disable  ignore    longlist  r        source   until
    args   commands   display  interact  n         restart  step     up
    b      condition  down     j         next      return   tbreak   w
    break  cont       enable   jump      p         retval   u        whatis
    bt     continue   exit     l         pp        run      unalias  where

    Miscellaneous help topics:
    ==========================
    exec  pdb

    (Pdb)

Oh! There's a pdb command named args!

Let's see what it does:

    (Pdb) help args
    Usage: a(rgs)

    Print the argument list of the current function.

That's good to know! In a debugging session, args displays the list of arguments associated with the current function. My breakpoint was outside of any function, so there were no arguments to display.

To make sure I was understanding this right, I put a breakpoint on the first line of the main function in the file:

    def process_hx_data(root_output_directory=''):
        """Process all historical data in ir_data_clean/.
        ...
        """
        breakpoint()

If I call args in the debugging session now, I should see something about the argument root_output_directory:

    $ python process_hx_data.py
    -> slides_file = 'known_slides/known_slides.json'
    (Pdb) args
    root_output_directory = ''
    (Pdb)

In this debugger session, args is showing that process_hx_data() was called with no argument for the parameter root_output_directory.

That's it. In the earlier debugger sessions, pdb wasn't showing me the value of the args variable I had defined to hold the parsed CLI arguments. It was running its own args command, which reported that there were no arguments in the current function.
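The way around the name clash is to ask pdb to evaluate an expression explicitly, using the p command (`p args`) instead of typing the bare name. Here's a minimal sketch that reproduces both behaviors without an interactive session. The `run_pdb_commands()` helper is my own invention for this post, not part of pdb; it feeds scripted commands to a `pdb.Pdb` instance and captures what pdb prints. The parser is a stripped-down stand-in for the real one.

```python
import argparse
import io
import pdb


def run_pdb_commands(commands, namespace):
    """Run pdb commands non-interactively; return everything pdb printed."""
    fake_stdin = io.StringIO("\n".join(commands) + "\n")
    captured = io.StringIO()
    # readrc=False skips any .pdbrc file; nosigint=True leaves signal
    # handlers alone.
    debugger = pdb.Pdb(stdin=fake_stdin, stdout=captured,
                       nosigint=True, readrc=False)
    # Debug a trivial module-level statement, using namespace as its
    # globals. pdb stops before the statement runs, outside any function.
    debugger.run("pass", namespace)
    return captured.getvalue()


parser = argparse.ArgumentParser()
parser.add_argument('--use-cached-data', action='store_true')
args = parser.parse_args(['--use-cached-data'])

# Bare "args" runs pdb's args command, which has nothing to report here.
bare_output = run_pdb_commands(["args", "continue"], {"args": args})

# "p args" evaluates the expression, so the variable's value is printed.
p_output = run_pdb_commands(["p args", "continue"], {"args": args})

print(bare_output)
print(p_output)
```

Only the second run's output includes the Namespace object. In a live session the same fix applies to attributes: `p args.use_cached_data` prints the value, whereas `args.use_cached_data` appears to be treated as the args command followed by some text it ignores.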

Conclusions

This was an interesting edge case to run into. The standard usage of argparse involves the line

    args = parser.parse_args()

I wonder how many people have tried to look at the value of args by putting a breakpoint after this line, and run into this very issue.

This has made me look more closely at the full set of commands that pdb makes available. Most of them are names I probably wouldn't use in a file, but there are a few that I could see using. I can imagine using display in a program to refer to some kind of screen object. I might be tempted to use restart for a boolean about whether some process should be restarted or not. If I do use these names, I'll try to remember to run help again if I see some odd behavior in a debugging session.
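If you'd rather check a set of names ahead of time, here's a rough sketch. It relies on the fact that pdb is built on the standard library's cmd module, which maps each command to a method named `do_<command>`. The helper functions and the list of candidate names are made up for this example.

```python
import pdb


def pdb_command_names():
    """Collect pdb command names from the Pdb class's do_* methods."""
    return {name[3:] for name in dir(pdb.Pdb) if name.startswith('do_')}


def names_shadowed_by_pdb(names):
    """Return the given names that are also pdb commands, sorted."""
    commands = pdb_command_names()
    return sorted(name for name in names if name in commands)


# Hypothetical variable names you might use in a program.
candidates = ['args', 'display', 'restart', 'parser', 'all_readings']
print(names_shadowed_by_pdb(candidates))
```

For this list, the names that collide are args, display, and restart. Any variable with one of those names needs `p` in front of it if you want to see its value in a debugging session.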

I'm now pretty sure the actual issue in my program had nothing to do with CLI arguments. I haven't finished debugging yet, but I'm sure the process will go more smoothly with a little better understanding of exactly how pdb works. I'll share more about the landslide monitoring project after these upcoming talks.

Resources

You can find the relevant code from this post in the sitka_irg_analysis GitHub repository; the main file is process_hx_data.py. Please note that this project hasn't seen much refactoring. Once this approach to landslide monitoring was shown to have value, I started focusing on creating a real-time monitoring tool.